Multi-Agent Retrieval-Augmented Framework for Evidence-Based Counterspeech Against Health Misinformation

Published in Second Conference on Language Modeling, 2025

Abstract

Large Language Models (LLMs) integrated with Retrieval-Augmented Generation (RAG) have shown strong potential in generating counterspeech against misinformation. However, existing approaches often rely on limited evidence and provide little control over the quality of generated responses.

We introduce a Multi-Agent Retrieval-Augmented Framework for generating evidence-based counterspeech targeting health misinformation. The framework orchestrates multiple LLM agents for evidence retrieval, summarization, generation, and refinement, leveraging both static (curated guidelines) and dynamic (real-time web) sources. This design ensures counterspeech that is timely, accurate, and contextually appropriate.

Our system outperforms single-agent and traditional RAG baselines across multiple metrics, including Politeness (0.88), Relevance (0.70), Informativeness (0.78), and Factual Accuracy (0.86). Human evaluations further validate that responses from our framework are consistently preferred over baseline outputs.

Keywords: Large Language Models, Multi-Agent Systems, Retrieval-Augmented Generation, Health Misinformation, Counterspeech

Dataset

We release a curated Reddit Health Misinformation Dataset to support further research:

👉 Health Misinformation Reddit Dataset

- Topics Covered: Misinformation posts about COVID-19, influenza (flu), and HIV

- Annotation: Classifier-assisted labeling + expert validation

- License: Creative Commons Attribution 4.0 International (CC BY 4.0)

This dataset serves as a benchmark for misinformation detection and counterspeech generation.

Contributions

Our main contributions are:

- Multi-Agent Framework for Counterspeech Generation

  - Modular pipeline with specialized agents for retrieval, summarization, generation, and refinement.

  - Achieves superior performance across metrics: Politeness (0.88), Relevance (0.70), Informativeness (0.78), and Factual Accuracy (0.86).

- Integration of Static + Dynamic Knowledge Sources

  - Combines trusted medical guidelines with real-time web evidence for both authority and timeliness.

- Ablation Studies & Prompting Comparisons

  - Demonstrates the role of each agent and compares prompting strategies (e.g., guided prompting vs. chain-of-thought).

- Public Dataset Release

  - Introduces a high-quality dataset on health misinformation, publicly released to advance future research.

Method Overview

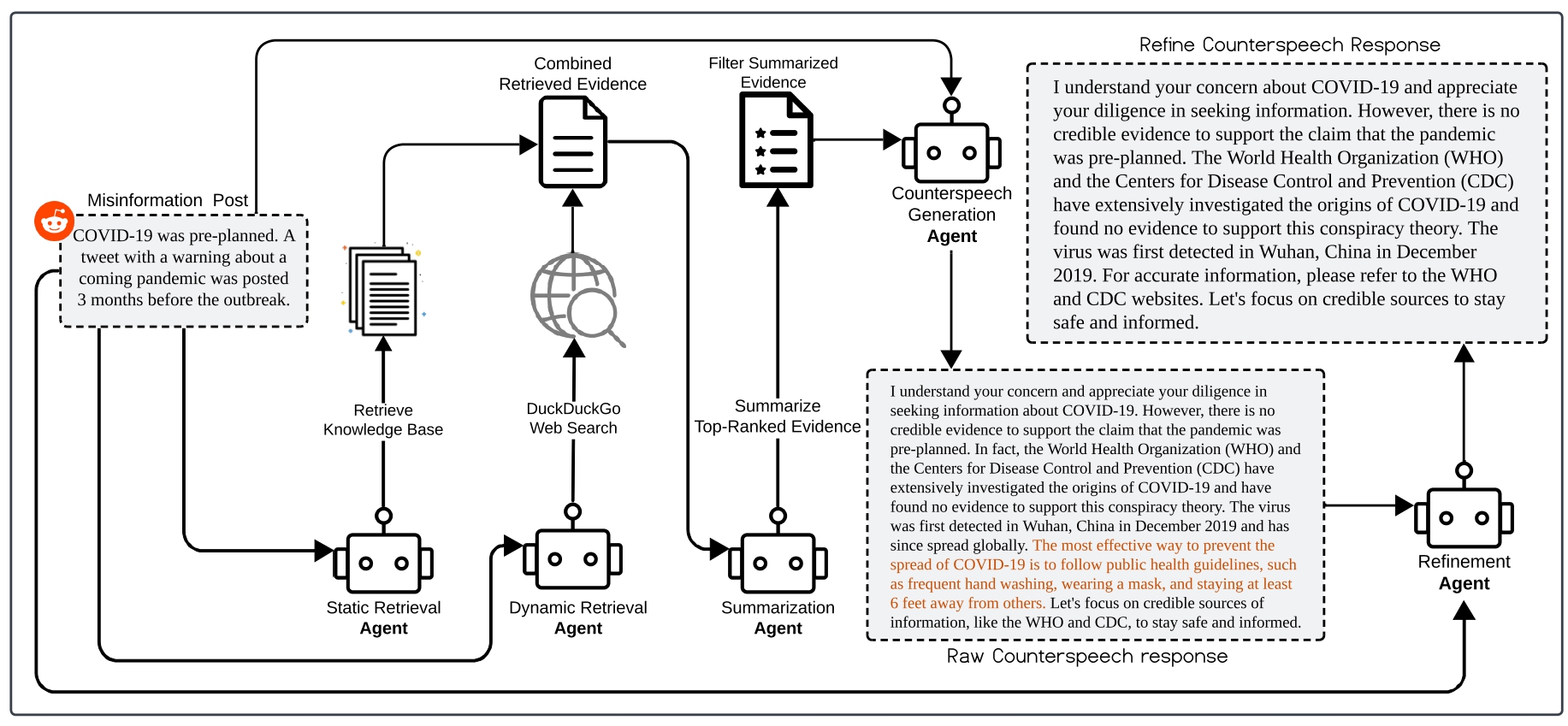

Figure 1: Overview of the multi-agent counterspeech framework.

- Static Retrieval Agent: Collects evidence from authoritative offline sources.

- Dynamic Retrieval Agent: Fetches real-time web evidence.

- Summarization Agent: Filters and ranks evidence for clarity and relevance.

- Generation Agent: Produces counterspeech grounded in summarized evidence.

- Refinement Agent: Improves clarity, politeness, and factuality.

This modular design enhances transparency, adaptability, and reliability in dynamic misinformation scenarios.
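The agent flow listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the prompt wording, the keyword-overlap retrieval, and the `llm` and `web_search` callables are all hypothetical stand-ins for whatever models and search backends the real system uses.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]
# Hypothetical stand-in for a web search backend: takes a query, returns snippets.
WebSearch = Callable[[str], List[str]]


@dataclass
class Evidence:
    source: str  # "static" (curated guidelines) or "dynamic" (real-time web)
    text: str


def static_retrieval_agent(post: str, guidelines: List[str]) -> List[Evidence]:
    """Toy retrieval: keep guideline passages sharing any word with the post."""
    words = set(post.lower().split())
    return [Evidence("static", g) for g in guidelines
            if words & set(g.lower().split())]


def dynamic_retrieval_agent(post: str, web_search: WebSearch) -> List[Evidence]:
    """Wrap whatever the (assumed) search backend returns as dynamic evidence."""
    return [Evidence("dynamic", hit) for hit in web_search(post)]


def summarization_agent(post: str, evidence: List[Evidence], llm: LLM) -> str:
    """Ask the LLM to filter and condense the pooled evidence."""
    joined = "\n".join(f"[{e.source}] {e.text}" for e in evidence)
    return llm("Summarize the evidence most relevant to this post.\n"
               f"Post: {post}\nEvidence:\n{joined}")


def generation_agent(post: str, summary: str, llm: LLM) -> str:
    """Draft counterspeech grounded in the summarized evidence."""
    return llm("Write a polite, factual reply to this post using the evidence.\n"
               f"Post: {post}\nEvidence summary: {summary}")


def refinement_agent(draft: str, summary: str, llm: LLM) -> str:
    """Revise the draft for clarity, politeness, and factuality."""
    return llm("Improve the clarity, politeness, and factuality of this reply.\n"
               f"Reply: {draft}\nEvidence summary: {summary}")


def run_pipeline(post: str, guidelines: List[str],
                 web_search: WebSearch, llm: LLM) -> str:
    """Run the agents in sequence: retrieve, summarize, generate, refine."""
    evidence = (static_retrieval_agent(post, guidelines)
                + dynamic_retrieval_agent(post, web_search))
    summary = summarization_agent(post, evidence, llm)
    draft = generation_agent(post, summary, llm)
    return refinement_agent(draft, summary, llm)
```

Because each agent is a plain function with explicit inputs and outputs, any single stage can be swapped or removed for an ablation without touching the others, which is the kind of modularity the overview describes.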

Experimental Results

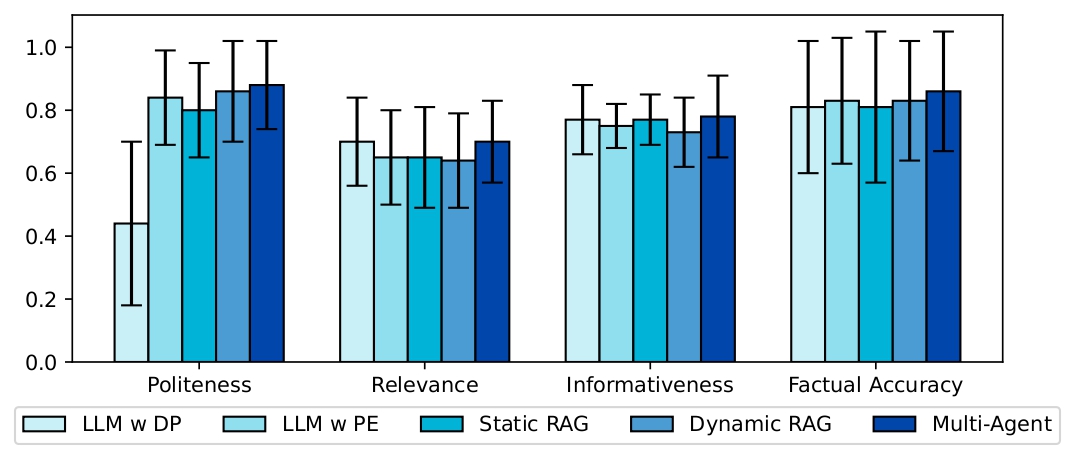

Figure 2: Comparison of our framework with baseline systems across Politeness, Relevance, Informativeness, and Factual Accuracy.

Our multi-agent approach consistently outperforms static RAG, dynamic RAG, and prompting-only methods. Error bars denote the standard deviation across annotators.
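For readers who want to reproduce this style of plot on their own annotations, the sketch below shows one way to turn per-annotator scores into a bar height and an error bar. It assumes the sample standard deviation is used (the page does not say which variant), and the `summarize` helper and the ratings are hypothetical placeholders, not values from the paper.

```python
from statistics import mean, stdev
from typing import List, Tuple


def summarize(scores: List[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of per-annotator scores.

    With fewer than two annotators the spread is undefined, so report 0.0.
    """
    spread = stdev(scores) if len(scores) >= 2 else 0.0
    return mean(scores), spread


# Placeholder ratings for illustration only (NOT results from the paper):
# three hypothetical annotators scoring one system on a 0-1 scale.
placeholder_ratings = {
    "Politeness": [0.9, 0.8, 1.0],
    "Relevance": [0.6, 0.7, 0.8],
}

for metric, scores in placeholder_ratings.items():
    bar_height, error_bar = summarize(scores)
    print(f"{metric}: mean={bar_height:.2f}, std={error_bar:.2f}")
```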

Resources

- 📄 Paper: arXiv Link

- 📂 Dataset: Health Misinformation Reddit Dataset

BibTeX

@inproceedings{anik2025multiagent,
  title={Multi-Agent Retrieval-Augmented Framework for Evidence-Based Counterspeech Against Health Misinformation},
  author={Anirban Saha Anik and Xiaoying Song and Elliott Wang and Bryan Wang and Bengisu Yarimbas and Lingzi Hong},
  booktitle={Second Conference on Language Modeling},
  year={2025},
  url={https://openreview.net/forum?id=P61AgRyU7E}
}

Recommended citation: Anirban Saha Anik, Xiaoying Song, Elliott Wang, Bryan Wang, Bengisu Yarimbas, Lingzi Hong. (2025). "Multi-Agent Retrieval-Augmented Framework for Evidence-Based Counterspeech Against Health Misinformation." Second Conference on Language Modeling (COLM 2025).
